The Inflection Point for Ethernet AI is coming!

Written by Mark Harris

Published on October 8th, 2024

The Cost of Networking for AI/ML Build-Outs is Dropping…

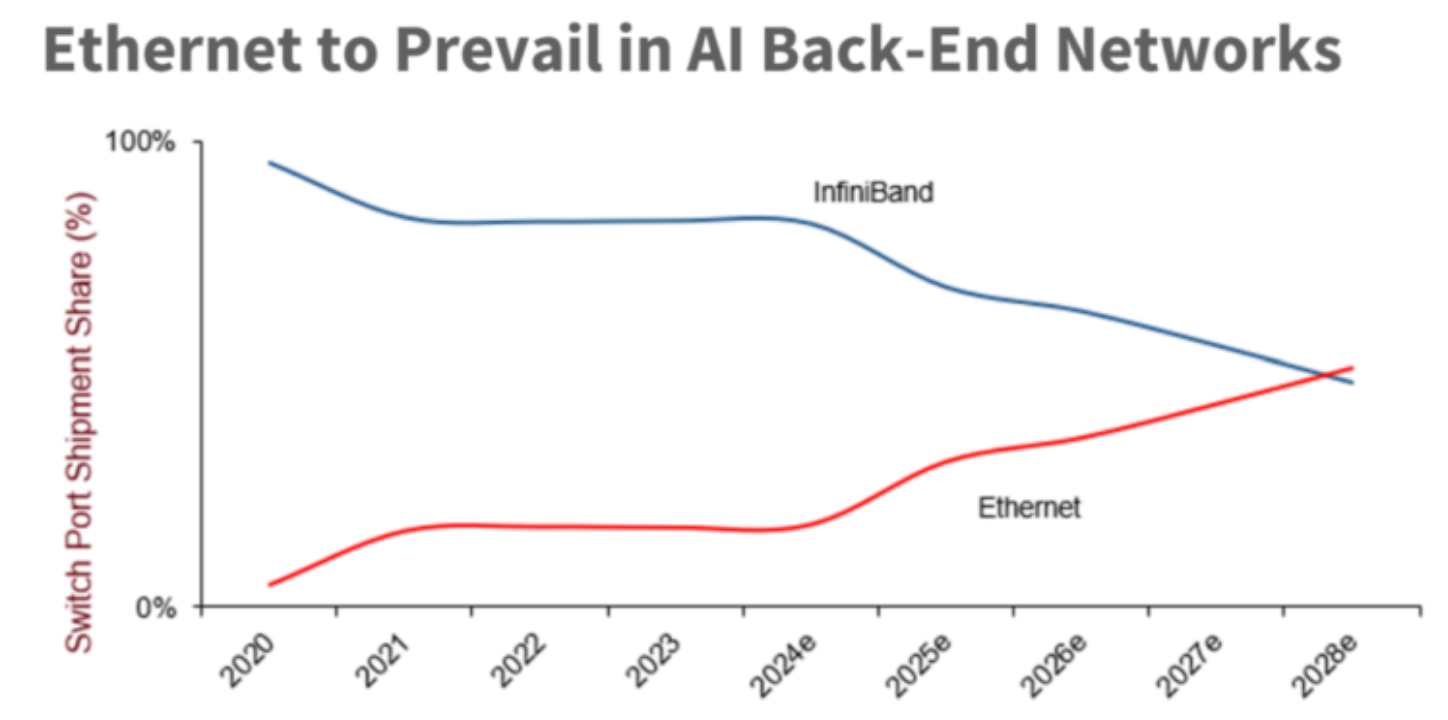

During a September 2024 webinar, Dell’Oro’s vice president of data center and campus switching research, Sameh Boujelbene, pointed out that the mix between InfiniBand and Ethernet for high-end computing (including AI/ML) is quickly shifting in favor of Ethernet. She went on to say that she expects a crossover point within three years, after which Ethernet will overtake InfiniBand for new AI/ML build-outs.

Source: Dell’Oro Webinar, Sameh Boujelbene, 9/19/2024

InfiniBand was originally created to overcome the bandwidth limitations of the PCI bus, and it has always been positioned as the premium-priced medium for the highest performance and lowest latency. Ethernet, on the other hand, has long been viewed as the lower-cost, massively scalable medium, but one that could not deliver the same level of performance at low latency. So it’s a big deal when these fundamentals change.

1) InfiniBand continues to be a premium-priced networking choice, flying in the face of modern open computing needs. Its high price stems from the small number of InfiniBand vendors. Mellanox, Intel, QLogic and FusionIO (along with a few others) have had their hands in it over the last 25 years, but mergers and acquisitions have steadily reduced the number of InfiniBand stewards. With so few competitors, InfiniBand costs remain high.

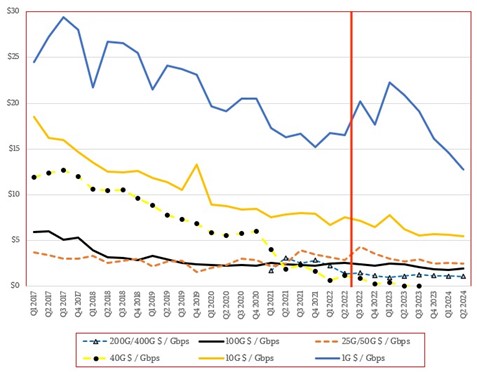

2) Ethernet itself has progressed technically. It now meets or exceeds link speeds previously seen only with InfiniBand, and low-latency enhancements such as RoCE (RDMA over Converged Ethernet) enable it to deliver the same class of high-end connectivity that AI/ML applications need. And with such broad availability of leading Ethernet switching options, Ethernet costs continue to fall.

Source: Futurenet.com, Timothy Prickett Morgan, 10/1/2024

So Ethernet now offers the high performance and low latency that AI/ML workloads require, at steadily decreasing cost. Add products like Broadcom’s StrataDNX family of chipsets, which can be configured as a Distributed Disaggregated Chassis (complete with Virtual Output Queue management), and it simply makes sense to bring Ethernet back to the core of your AI/ML designs for new build-outs.
