Intel® DPDK Performance on the SAU5081I Server

DPDK-Enabled Servers to Remove Bottlenecks

This Technology Brief examines the main building blocks of Intel’s Data Plane Development Kit (DPDK) and how it significantly boosts data plane throughput on the Accton SAU5081I Cloud Server platform. Four test cases were used to measure the throughput gain from DPDK compared with standard Linux bridging.

Applications built with DPDK avoid much of the packet-processing overhead of a standard Linux operating system environment, moving network packets from ingress LAN ports to system memory and then to egress ports as fast as possible. This data plane packet processing can significantly boost throughput for application software running on open platforms. Increasingly, DPDK-enabled hardware will play an important role in removing bottlenecks in critical network devices within the data center.

SAU5081I Cloud Server Hardware

The Accton SAU5081I is a flexible, high-density server hardware platform designed for cloud data centers. The high-availability system comprises dual compute nodes in a 1RU full-width chassis. Each node is based on dual-socket Intel® Xeon® E5-2600 v3/v4 series processors, supporting up to 14 cores (28 threads) per socket, or up to 28 cores (56 threads) per node, with four DDR4 DIMM slots per socket for up to 128 GB of memory per socket (256 GB per node). Local storage options include up to 5 SATA III high-speed SSDs, with support for Intel® RSTe and RAID. The SAU5081I also offers optional dual or quad 10 Gbps SFP+ network ports for high-bandwidth connections to the network.

Inside Intel® DPDK

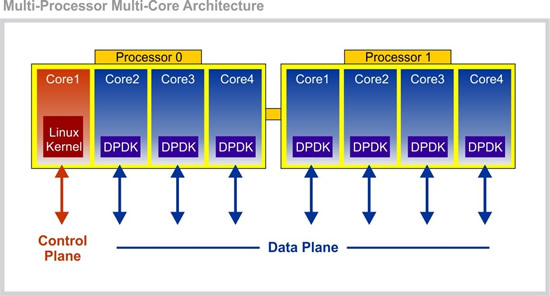

Intel® DPDK is a set of software development libraries for building high-performance applications that run on Intel®-based network appliance platforms. The DPDK components leverage the multi-core and multi-processor architecture of Intel® CPUs to optimize data plane throughput.

The main components of the Intel® DPDK libraries can be summarized as follows:

- Memory Manager: Allocates memory for pools of objects. Each pool implements a ring to store free objects and the manager ensures that objects are spread equally on all DRAM channels.

- Buffer Manager: Pre-allocates fixed-size buffers stored in memory pools. This saves much of the time an operating system would otherwise spend allocating and de-allocating buffers.

- Queue Manager: Provides fixed-size lockless queues so that software components can pass packets to each other without waiting on locks.

- Flow Classification: Provides an efficient mechanism for assigning packets to flows so they can be processed quickly, boosting throughput.

- Poll Mode Drivers: Drivers for 1 and 10 Gigabit Ethernet controllers that poll for received packets instead of relying on interrupt-based signaling, speeding up the packet pipeline.
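To make the Buffer Manager idea concrete, here is a minimal, single-threaded sketch in C of a fixed-size buffer pool: every buffer is carved out of one region allocated up front, so getting or returning a buffer is just a pointer pop or push, with no call into the operating system's allocator. The names (`buf_pool`, `pool_get`, `pool_put`), the 2048-byte buffer size and the pool capacity are hypothetical, and for simplicity the free list is a LIFO stack rather than the ring used by the Memory Manager. In real DPDK code this role is played by the `rte_mempool` library (for packet buffers, typically created with `rte_pktmbuf_pool_create`), which also provides per-core caches and safe multi-core access.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define BUF_SIZE 2048  /* hypothetical fixed buffer size in bytes */
#define POOL_CAP 1024  /* hypothetical number of buffers in the pool */

/* Fixed-size buffer pool: one allocation up front, O(1) get/put afterwards.
 * Not thread-safe; a sketch of the idea, not DPDK's rte_mempool. */
struct buf_pool {
    unsigned char *mem;         /* contiguous region holding all buffers */
    void *free_list[POOL_CAP];  /* LIFO stack of free buffer pointers */
    size_t nfree;               /* number of entries on the stack */
};

/* Allocate the region once and push every buffer onto the free stack. */
static int pool_init(struct buf_pool *p) {
    p->mem = malloc((size_t)POOL_CAP * BUF_SIZE);
    if (p->mem == NULL)
        return -1;
    for (size_t i = 0; i < POOL_CAP; i++)
        p->free_list[i] = p->mem + i * BUF_SIZE;
    p->nfree = POOL_CAP;
    return 0;
}

/* Take a buffer from the pool; returns NULL when the pool is empty. */
static void *pool_get(struct buf_pool *p) {
    return p->nfree > 0 ? p->free_list[--p->nfree] : NULL;
}

/* Return a buffer previously obtained from pool_get. */
static void pool_put(struct buf_pool *p, void *buf) {
    assert(p->nfree < POOL_CAP);
    p->free_list[p->nfree++] = buf;
}

/* Release the whole region in one call. */
static void pool_destroy(struct buf_pool *p) {
    free(p->mem);
    p->mem = NULL;
    p->nfree = 0;
}
```

Because the pool is sized once at startup, the per-packet cost of obtaining a buffer stays constant no matter how fast packets arrive.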

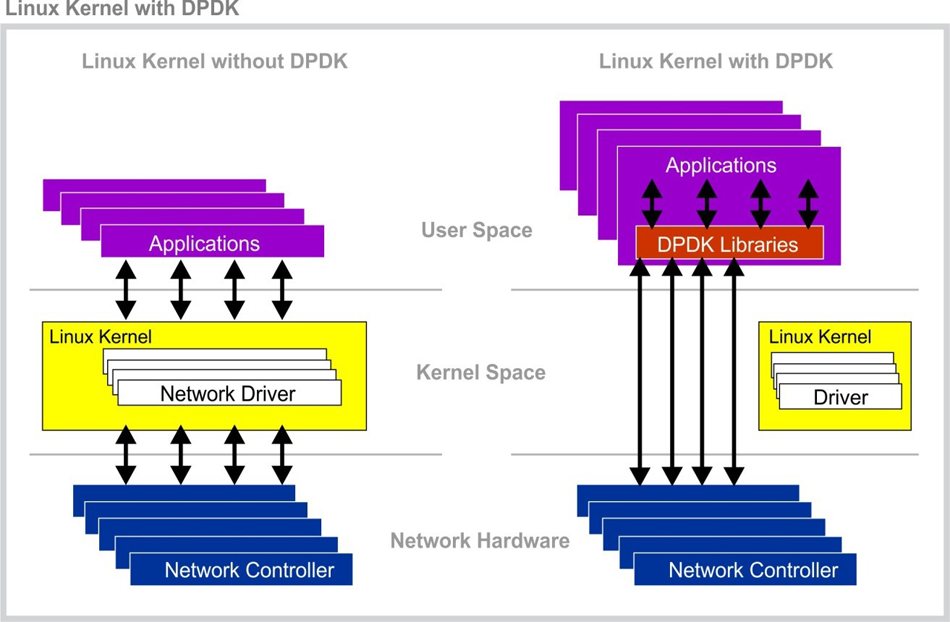

The Intel® DPDK libraries run in Linux user space, just like any other Linux application. Using user-space I/O drivers, they give applications direct access to the network hardware, bypassing the Linux kernel network stack. Data plane processing is handled by the DPDK libraries, which pass network packets directly to the application's network stack without Linux kernel overhead.

DPDK Application Acceleration

Network infrastructure traffic typically consists of small packets (as small as 64 bytes) arriving at network interfaces at a very high rate, which places major stress on data plane processing. Server traffic typically uses larger packets (1024 bytes or more), which arrive at a lower packet rate for the same bit rate. At Gigabit and 10 Gigabit rates, on a system using interrupt-driven network drivers, the number of interrupts for received packets rapidly overwhelms the system. DPDK applications use poll-mode network drivers instead, which provides a significant throughput improvement.
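The packet-rate problem is easy to quantify. On Ethernet, every frame also occupies 20 bytes of wire time for the preamble, start-of-frame delimiter and inter-frame gap, so the maximum frame rate is the line rate divided by (frame size + 20 bytes) × 8 bits. The short C sketch below computes that rate and the resulting per-frame time budget; the function names are illustrative, and frame sizes are assumed to include the 4-byte FCS, as in the usual 64-byte minimum.

```c
#include <assert.h>

/* Extra wire time per Ethernet frame, in bytes:
 * 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap. */
#define ETH_WIRE_OVERHEAD_BYTES 20.0

/* Maximum frames per second for a line rate in bits/s and a frame size in
 * bytes (frame size includes the 4-byte FCS, so the minimum is 64). */
static double max_frames_per_sec(double line_rate_bps, double frame_bytes) {
    assert(frame_bytes >= 64.0);
    return line_rate_bps / ((frame_bytes + ETH_WIRE_OVERHEAD_BYTES) * 8.0);
}

/* Time available to process each frame at full line rate, in nanoseconds. */
static double ns_per_frame(double line_rate_bps, double frame_bytes) {
    return 1e9 / max_frames_per_sec(line_rate_bps, frame_bytes);
}
```

For a 10 Gbps port, 64-byte frames work out to about 14.88 million frames per second, or roughly 67 ns per frame, while 1024-byte frames arrive at only about 1.2 million frames per second. That 67 ns budget leaves very little room for servicing a per-packet interrupt.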

The DPDK libraries allow software tasks to be bound to specific CPU cores. This one-to-one mapping of software threads to hardware threads avoids the overhead of the Linux scheduler switching tasks on and off those cores.
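In DPDK, the Environment Abstraction Layer (EAL) runs a thread on each logical core selected on the command line (for example with the `-l` core-list option) and pins each thread to its core. The underlying Linux mechanism can be sketched in plain C with `sched_setaffinity`; the helper names below are hypothetical, and the code is Linux/glibc-specific (it needs `_GNU_SOURCE`).

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>

/* Restrict the calling thread to exactly one CPU core.
 * Returns 0 on success, -1 on error (errno set by sched_setaffinity). */
static int pin_to_core(int core) {
    assert(core >= 0 && core < CPU_SETSIZE);
    cpu_set_t set;
    CPU_ZERO(&set);
    CPU_SET(core, &set);
    return sched_setaffinity(0, sizeof(set), &set);  /* pid 0 = calling thread */
}

/* Lowest-numbered core the calling thread may currently run on, or -1. */
static int first_allowed_core(void) {
    cpu_set_t set;
    if (sched_getaffinity(0, sizeof(set), &set) != 0)
        return -1;
    for (int c = 0; c < CPU_SETSIZE; c++)
        if (CPU_ISSET(c, &set))
            return c;
    return -1;
}
```

In a forwarding application, each worker thread would pin itself once at startup and then loop polling its assigned ports. Keeping other tasks off those cores (for example with the `isolcpus` kernel parameter) further reduces scheduler interference.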

When memory or PCIe access is slowing the CPU down, the DPDK libraries can free up bandwidth by processing packets in groups (for example, four at a time), so that multiple memory reads are reduced to a single read. The DPDK libraries can also streamline memory access by aligning data structures to cache-line boundaries and minimizing accesses to external memory. Data can also be prefetched into the cache before it is needed, so the CPU does not have to wait.
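The following C sketch illustrates both techniques: the packet descriptor is aligned to a 64-byte cache line, and packets are handled four at a time while the next group is prefetched. The `struct pkt` type and `total_bytes` function are hypothetical stand-ins (real DPDK code works on `rte_mbuf` buffers returned in bursts by `rte_eth_rx_burst` and can prefetch with `rte_prefetch0`), and `__builtin_prefetch` is a GCC/Clang builtin that only hints the CPU. The 64-byte line size is an assumption that matches current Intel® Xeon® processors.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64  /* assumed cache-line size in bytes */
#define BURST 4        /* packets handled per iteration */

/* Hypothetical packet descriptor; _Alignas on the first member aligns the
 * whole struct, so each array element starts on its own cache line. */
struct pkt {
    _Alignas(CACHE_LINE) uint16_t len;
    uint8_t data[2048];
};

/* Sum the lengths of n packets, BURST at a time, prefetching the next
 * group's descriptors while the current group is processed. */
static uint64_t total_bytes(struct pkt *const *pkts, size_t n) {
    assert(pkts != NULL || n == 0);
    uint64_t sum = 0;
    size_t i = 0;
    for (; i + BURST <= n; i += BURST) {
        for (size_t j = i + BURST; j < i + 2 * BURST && j < n; j++)
            __builtin_prefetch(pkts[j]);  /* hint only */
        for (size_t j = i; j < i + BURST; j++)
            sum += pkts[j]->len;
    }
    for (; i < n; i++)  /* leftover packets when n is not a multiple of BURST */
        sum += pkts[i]->len;
    return sum;
}
```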

Applications often need to access shared data structures, which can become a serious bottleneck when several cores contend for them. With the DPDK libraries, you can use per-core data and lockless queues to reduce the amount of shared data and avoid locking.
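A lockless queue between two cores can be sketched with C11 atomics. In the single-producer/single-consumer ring below, one core enqueues packet pointers while another dequeues them without any lock: each index is written by only one side, and release/acquire ordering guarantees that a slot is filled before the consumer can see it. This is a simplified illustration with hypothetical names; DPDK's own `rte_ring` library also supports multiple producers and consumers and bulk operations.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define RING_SIZE 1024u            /* capacity; must be a power of two */
#define RING_MASK (RING_SIZE - 1u)
static_assert((RING_SIZE & RING_MASK) == 0, "RING_SIZE must be a power of two");

/* Head and tail sit on separate cache lines so the two cores do not
 * invalidate each other's line on every update. */
struct spsc_ring {
    _Alignas(64) atomic_size_t head;  /* next slot to fill; written only by the producer */
    _Alignas(64) atomic_size_t tail;  /* next slot to drain; written only by the consumer */
    void *slots[RING_SIZE];
};

static void ring_init(struct spsc_ring *r) {
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Producer side: returns false if the ring is full. */
static bool ring_enqueue(struct spsc_ring *r, void *obj) {
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head - tail == RING_SIZE)
        return false;
    r->slots[head & RING_MASK] = obj;
    atomic_store_explicit(&r->head, head + 1, memory_order_release);  /* publish slot */
    return true;
}

/* Consumer side: returns false if the ring is empty. */
static bool ring_dequeue(struct spsc_ring *r, void **obj) {
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (head == tail)
        return false;
    *obj = r->slots[tail & RING_MASK];
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);  /* release slot */
    return true;
}
```

The indices are free-running counters; masking with `RING_MASK` maps them onto slots, and unsigned subtraction gives the fill level even after the counters wrap.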

Altogether, Intel DPDK delivers a powerful software model for application development that dramatically increases small packet throughput. In fact, systems based on Intel’s high-end processors have achieved Layer 3 forwarding rates of over 80 million packets per second (Mpps) for 64-byte packets.

SAU5081I Test Setup

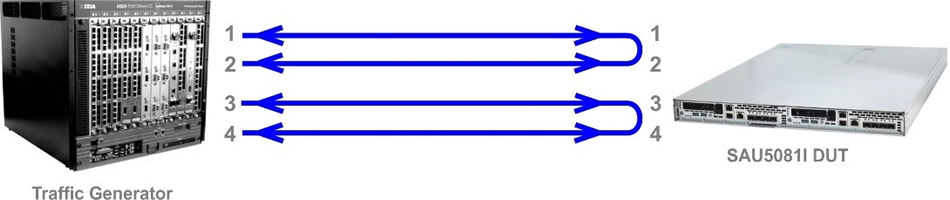

The objective of the test setup was to measure the DPDK throughput performance of the SAU5081I as compared to Linux-based bridging software.

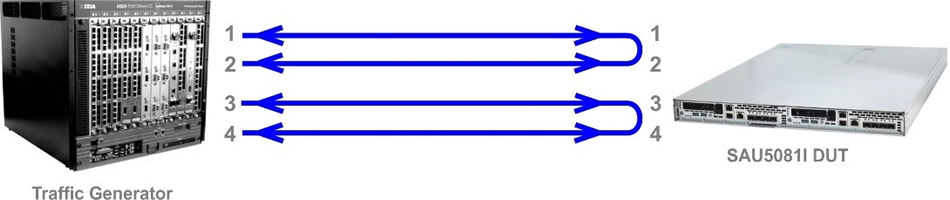

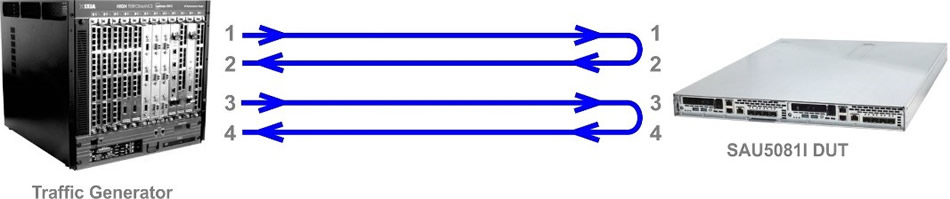

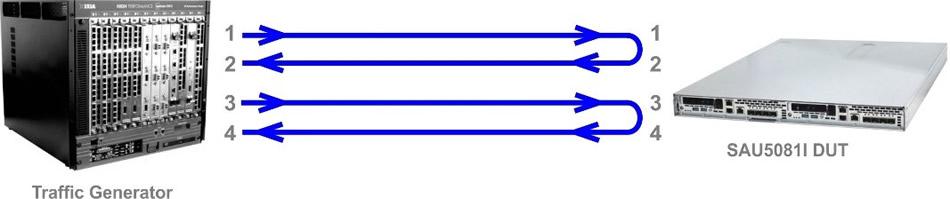

The test used four setup configurations, each consisting of an SAU5081I server and a traffic generator.

The SAU5081I system was configured as follows:

| Parameter | Value |
|---|---|
| BIOS Version | SAU5081 V24 2016008111CP |
| Processor Version | Intel(R) Xeon(R) CPU E5-2620 v3 @ 2.40GHz |
| BIOS Settings | Hyper-Threading [ALL]: [Enable]; Power Technology: [Energy Efficient] |
| Number of Processors | 2 |
| Logical CPUs | 24 (2 × 6 cores, Hyper-Threading enabled) |
| Memory | 32 GB |
| Linux System | Ubuntu 15.04 Desktop, kernel 3.19.0-15 |
| DPDK Test Application | l2fwd |

The SAU5081I Linux host ran the DPDK Layer 2 forwarding sample application, l2fwd, a simple example of packet processing with DPDK. The l2fwd application can operate in both physical and virtualized environments and is often used for benchmark performance tests with a traffic generator.

The l2fwd application forwards each packet received on a port to the adjacent port. This means that for the SAU5081I with quad SFP+ 10G ports installed, ports 1 and 2 forward to each other, and ports 3 and 4 forward to each other.
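The pairing rule fits in one line: with the four ports numbered 0 to 3 (the brief's ports 1 to 4), each port forwards to the port whose index differs only in the lowest bit. This is a hypothetical helper for illustration that assumes an even number of enabled ports; the real l2fwd builds a destination-port table at startup from the ports it has been told to use.

```c
#include <assert.h>

/* Hypothetical helper: destination for 0-based port `port` when ports are
 * paired 0<->1, 2<->3, ...; assumes an even number of enabled ports. */
static unsigned paired_dst_port(unsigned port, unsigned nb_ports) {
    assert(nb_ports % 2 == 0 && port < nb_ports);
    return port ^ 1u;  /* flip the lowest bit: even -> port+1, odd -> port-1 */
}
```

Applied to the brief's numbering, port 1 (index 0) and port 2 (index 1) forward to each other, as do port 3 (index 2) and port 4 (index 3).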

For comparison, throughput was also measured using the bridging utility built into the Linux kernel, which performs Layer 2 forwarding between ports on the host system. In one test case, Linux bridging was “tuned” by binding the receive interrupts of each network interface to a single CPU core. This tuned configuration represents the best-case performance for Linux bridging.
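On Linux, an interrupt is bound to a core by writing a hexadecimal CPU bitmask to `/proc/irq/<N>/smp_affinity`, where `<N>` is the IRQ number listed for the NIC queue in `/proc/interrupts`. The brief does not state the exact commands used for the tuned test, so the C sketch below is only an illustration: it formats the mask for a single core (cores 0 to 31 only) and writes it, which requires root privileges. The function names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* Format the hex CPU bitmask accepted by /proc/irq/<N>/smp_affinity for a
 * single core. Handles cores 0-31 only; larger systems use comma-separated
 * 32-bit groups. Returns the number of characters written (as snprintf). */
static int irq_mask_for_core(unsigned core, char *out, size_t outlen) {
    assert(core < 32);
    return snprintf(out, outlen, "%x", 1u << core);
}

/* Bind IRQ `irq` to `core` by writing its mask (requires root).
 * Returns 0 on success, -1 on failure. */
int set_irq_affinity(unsigned irq, unsigned core) {
    char path[64], mask[16];
    snprintf(path, sizeof path, "/proc/irq/%u/smp_affinity", irq);
    irq_mask_for_core(core, mask, sizeof mask);
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;
    int ok = fprintf(f, "%s\n", mask) > 0;
    return (fclose(f) == 0 && ok) ? 0 : -1;
}
```

If the `irqbalance` daemon is running, it may later overwrite manually set affinities.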

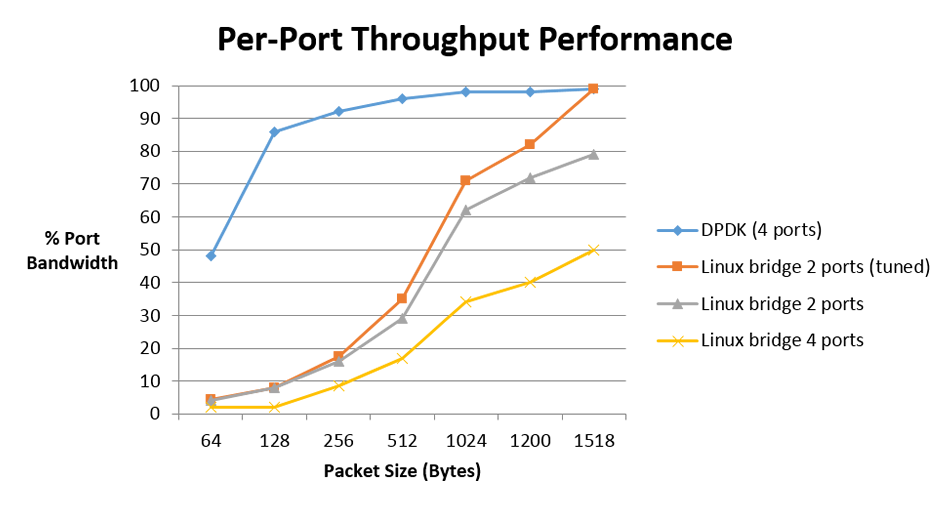

Server Packet Throughput with DPDK

Test results confirmed the performance boost of DPDK, particularly for packet sizes below 1024 bytes. The DPDK throughput per 10G port on the SAU5081I remained well above 8 Gbps for packet sizes down to 128 bytes, whereas Linux bridge throughput fell below 1 Gbps. That’s more than eight times the throughput! For 64-byte packets, DPDK throughput fell to 4.8 Gbps, but this was still an impressive ten times the throughput of the Linux bridge (2 ports, tuned).

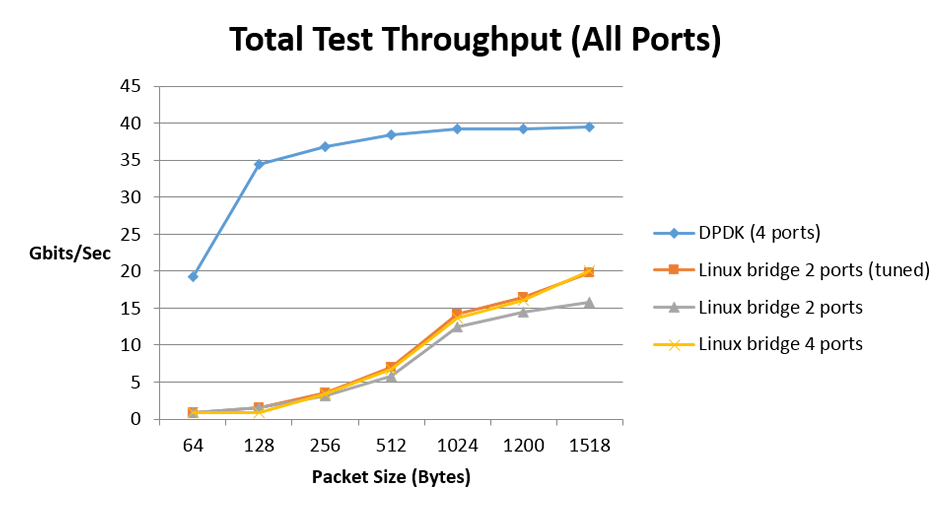

The results for total port throughput (2-port and 4-port configurations) show the same performance boost as the per-port throughput.

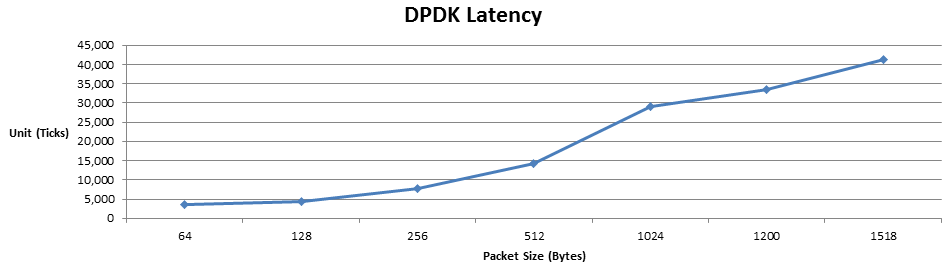

As a separate measurement, DPDK packet latency was recorded to show how forwarding latency through the server varies with packet size.

DPDK Boosts Cloud Server Throughput

Accton’s SAU5081I Cloud Server has been designed as an open, high-performance platform based on standard Intel® x86 communications hardware. Implementing Intel® DPDK on standard Intel® x86 CPU hardware platforms provides a proven throughput boost. Testing the packet throughput of a DPDK application running on the SAU5081I clearly shows that port throughput improves by up to ten times for smaller packet sizes compared with standard Linux bridge forwarding.

The impressive data plane throughput of DPDK on the SAU5081I server demonstrates that it is an excellent platform for building a wide range of high-performing network appliances. The SAU5081I server platform enables any available operating system and software applications to be installed, creating a customized flexible device that is a solid future-proof investment for next-generation networks.