AI-Driven Networking: From Manual to Autonomous Fabrics

Written by Ray H.

Published on Nov 12, 2025

How next-gen networks evolve, and how Accton helps you lead the change

Introduction

Today’s enterprise and AI-centric networks still depend heavily on manual tasks: device configuration, patching, traffic-routing tweaks, siloed management domains and reactive troubleshooting. At the same time, the demands placed on the network are intensifying: ultra-high throughput, elastic scalability, edge extensions, multi-cloud fabrics and AI workloads with unpredictable flows. The result? Traditional network operations simply can’t keep up.

The shift we’re witnessing is from manually managed networks to autonomous network fabrics: self-driving infrastructures that sense demand, think proactively and act automatically. For Accton, with its hardware heritage, open-network vision and composable-architecture mindset, this transition is not just a trend; it’s a natural fit.

“What if your network could reroute itself, heal itself and scale itself, whilst your team focuses on value rather than valves?”

Why the time is now

Three major forces drive the need for autonomous fabrics:

- Surging complexity: Today’s networks span data centers, edge sites, multi-cloud domains, multiple vendors and hybrid infrastructures. Manual operations don’t scale.

- Demand for agility & scale: AI training clusters, real-time edge inference and 5G/6G deployments demand networks that respond dynamically, not through weeks of manual configuration.

- AI/analytics maturity in network operations: Advances in telemetry, ML/AI models and intent-based networking make the idea of a network that acts on its own viable. For example, research defines autonomous networks as systems that “sense, think and act” with minimal human intervention.

Together, these drivers make the era of manual network operations look increasingly untenable.

What does an “autonomous fabric” look like?

An autonomous fabric embodies several characteristics:

- Intent-based networking: Business intents (e.g., “Ensure < 50 ms latency for inference traffic”) are translated into network configurations without low-level device commands.

- Closed-loop automation: Real-time telemetry flows in → AI/analytics processes that data → actions are triggered automatically (reroute, allocate, isolate).

- Self-healing & self-optimising: The network detects anomalies, optimises performance, applies remediation — all without waiting for a human ticket.

- Cross-domain, multi-vendor orchestration: The fabric spans access, aggregation, core, edge, and cloud — and integrates vendor-agnostic elements, so the network behaves as one adaptive system.

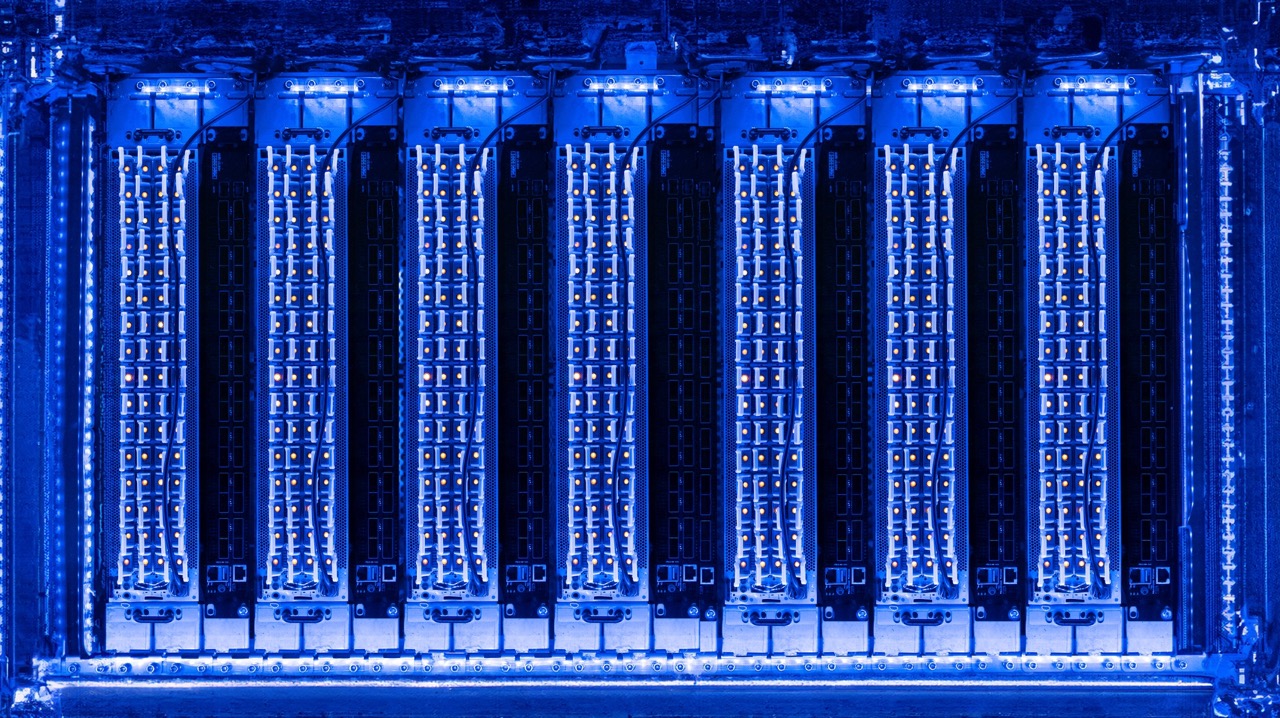

- AI-native infrastructure readiness: The fabric must support ultra-low latency, very high throughput, rich telemetry — characteristics that mesh well with Accton’s focus on high-performance data centre and edge networking.
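The intent-based and closed-loop characteristics above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Accton's or any vendor's API: the `Intent` class, `compile_intent`, `closed_loop_step`, the policy field names and the `low-latency` queue name are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Intent:
    """A business intent, e.g. 'ensure < 50 ms latency for inference traffic'."""
    traffic_class: str
    max_latency_ms: float


def compile_intent(intent: Intent) -> dict:
    """Translate an intent into a device-neutral policy (hypothetical schema)."""
    return {
        "match": {"traffic_class": intent.traffic_class},
        "queue": "low-latency",
        "latency_budget_ms": intent.max_latency_ms,
    }


def closed_loop_step(policy: dict, latency_samples_ms: list[float]) -> str:
    """One sense -> think -> act pass: compare telemetry with the budget."""
    if not latency_samples_ms:
        return "no-data"  # never act on missing telemetry
    observed = mean(latency_samples_ms)
    if observed > policy["latency_budget_ms"]:
        return "reroute"  # budget exceeded: trigger a corrective action
    return "hold"         # within budget: leave the fabric alone


policy = compile_intent(Intent("inference", 50.0))
action = closed_loop_step(policy, [42.0, 61.0, 58.0])
```

In a real fabric the returned action would be handed to an orchestrator rather than returned as a string; the point here is only that the operator states the latency budget once and the loop, not a person, compares telemetry against it.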

Why this matters for Accton

For Accton and Edgecore, the move to autonomous fabrics presents a big opportunity:

- Your open networking / disaggregated hardware proposition means customers avoid vendor lock-in and are more agile — an essential enabler of autonomous fabric scenarios.

- Your high-performance switches / fabrics (e.g., 400G/800G readiness, large-scale data centre interconnect) align well with the demands of fabrics built for AI-scale workloads.

- Rich telemetry, programmable APIs and modular design become differentiators: an autonomous fabric only works when hardware and software are designed to align.

- Your involvement in composable compute/disaggregated architectures (for example, via your Nexvec™ platform) means you’re not just talking about network switches; you’re talking about the compute-network-storage fabric as a whole. This full-stack viewpoint positions Accton as more than just a “network hardware vendor”.

Mini checklist: How Accton can help you evolve your network

- Use open switches with programmable APIs and disaggregated control.

- Deploy modular, high-density fabric hardware that supports high-throughput, low-latency paths.

- Integrate telemetry at the hardware level: enable real-time data collection, normalisation and analytics.

- Adopt a phased approach to automation: from assisted → semi-autonomous → fully autonomous.

- Support edge + cloud + on-prem domains: future fabrics will span all three.

- Emphasise open-standards and vendor-agnostic designs: autonomous fabrics don’t want to be locked into siloed stacks.

Practical Use-Cases & Scenarios

AI training cluster in the data centre: Large-scale GPU/accelerator clusters generate huge traffic spikes and require highly optimised interconnects. An autonomous fabric can detect congestion or skewed flows and adjust paths/allocations dynamically.
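As a rough sketch of what "detect congestion and adjust paths" could mean for the training-cluster case, the Python below flags hot links and picks the path whose busiest link is least loaded. The link names, the 0.8 threshold and both function names are assumptions made for illustration; production fabrics would typically rely on mechanisms such as adaptive routing or a traffic-engineering controller rather than this simple rule.

```python
def congested_links(utilisation: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Links whose utilisation (0.0 to 1.0) is at or above the threshold."""
    return sorted(link for link, load in utilisation.items() if load >= threshold)


def pick_path(paths: dict[str, list[str]], utilisation: dict[str, float]) -> str:
    """Choose the path whose busiest link is the least loaded."""
    def bottleneck(links: list[str]) -> float:
        # Links with no telemetry are treated as fully loaded (conservative).
        return max(utilisation.get(link, 1.0) for link in links)

    return min(paths, key=lambda name: bottleneck(paths[name]))


# Hypothetical two-spine leaf/spine fabric between leaf1 and leaf2.
paths = {
    "via-spine1": ["leaf1-spine1", "spine1-leaf2"],
    "via-spine2": ["leaf1-spine2", "spine2-leaf2"],
}
utilisation = {
    "leaf1-spine1": 0.93,
    "spine1-leaf2": 0.40,
    "leaf1-spine2": 0.35,
    "spine2-leaf2": 0.55,
}

hot = congested_links(utilisation)   # links worth reporting or draining
best = pick_path(paths, utilisation)  # candidate path for new flows
```

Ranking paths by their bottleneck link, rather than by average load, reflects the fact that a single saturated hop is enough to stall a collective operation across the cluster.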

Edge AI inference fabric: Thousands of edge nodes need connectivity, minimal latency, and dynamic scaling. Deploying an autonomous fabric at the edge lets you treat it as a fluid network: nodes come online, traffic patterns shift, the network adapts.

Multi-cloud connectivity & hybrid infrastructure: On-prem data centre → public cloud → edge sites. The fabric stitches them together, monitors across domains, enforces policy globally, adapts to business-intent changes.

Resilience & security fabric: The network detects anomalous traffic, isolates faults, reroutes around failures automatically — turning the network into a proactive defence layer rather than a passive one.
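One simple way to picture the "detects anomalous traffic, isolates faults" step is a z-score check against recent history. The sketch below is a deliberately basic stand-in for whatever analytics a real fabric would run; the function names, the threshold of 3.0 and the `isolate`/`allow` actions are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `z_threshold` standard deviations
    from the mean of recent samples."""
    if len(history) < 2:
        return False  # not enough data to estimate a spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # perfectly flat history: any change stands out
    return abs(latest - mu) / sigma > z_threshold


def respond(history: list[float], latest: float) -> str:
    """Map the detection result to a remediation action (hypothetical names)."""
    return "isolate" if is_anomalous(history, latest) else "allow"


# Recent traffic on one port, in Mbps, followed by a sudden burst.
recent_mbps = [100.0, 104.0, 98.0, 101.0, 97.0, 102.0]
burst_action = respond(recent_mbps, 250.0)
normal_action = respond(recent_mbps, 103.0)
```

A fixed threshold like this will misfire on traffic that is naturally bursty, which is one reason the roadmap below puts telemetry quality and governance ahead of full automation.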

Roadmap & Implementation Considerations

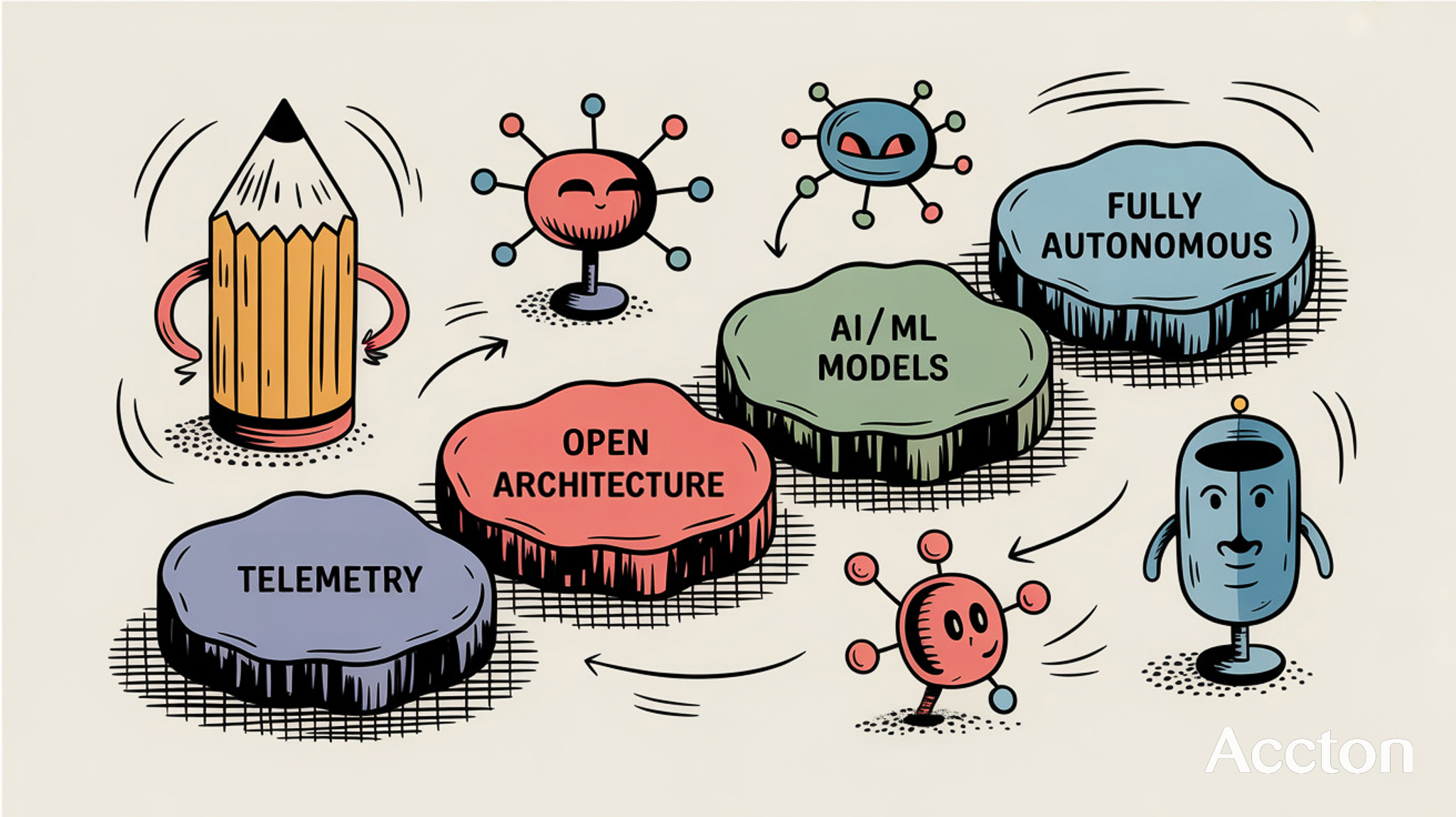

- Data & telemetry foundation first: Collect high-fidelity telemetry from devices across domains. Without good data you cannot build closed-loop AI.

- Modular, open architecture: Use hardware and software that support programmability, disaggregation and open APIs.

- AI/ML model deployment + closed-loop workflows: Identify use-cases (traffic engineering, anomaly detection, self-healing) and build the analytics/actions pipeline.

- Phased adoption: Start with assisted automation (recommendations), move to semi-autonomous (closed-loop in defined domains), then to fully autonomous (cross-domain intents). TM Forum’s Autonomous Networks framework describes a similar progression through autonomy levels L0 (manual) to L5 (fully autonomous).

- Change in skills & operations mindset: Network ops teams evolve from CLI/configuration to intent-definition and oversight of autonomous fabric behaviour.

- Edge + multi-domain integration: Ensure the orchestration spans the edge, on-prem, cloud and accounts for legacy systems.

- Governance, trust & transparency: Autonomous systems make decisions — you need explainability, monitoring, rollback paths and governance to build confidence.
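The phased-adoption and governance points above can be combined into one small sketch: a controller that only recommends actions in assisted mode, applies them inside trusted domains in semi-autonomous mode, and records every decision so it can be explained or rolled back. The three modes mirror the phases named in this article, not a formal standard, and the `Controller` class, its fields and the domain names are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Mode(IntEnum):
    ASSISTED = 1          # recommendations only
    SEMI_AUTONOMOUS = 2   # closed loop inside trusted domains
    FULLY_AUTONOMOUS = 3  # closed loop across all domains


@dataclass
class Controller:
    mode: Mode
    trusted_domains: set[str] = field(default_factory=set)
    audit_log: list[dict] = field(default_factory=list)

    def handle(self, domain: str, action: str, reason: str) -> str:
        """Decide whether an action is applied or only recommended."""
        if self.mode == Mode.FULLY_AUTONOMOUS:
            decision = "applied"
        elif self.mode == Mode.SEMI_AUTONOMOUS and domain in self.trusted_domains:
            decision = "applied"
        else:
            decision = "recommended"
        # Every decision is logged with its reason for later explanation.
        self.audit_log.append(
            {"domain": domain, "action": action, "reason": reason, "decision": decision}
        )
        return decision

    def rollback_candidates(self) -> list[dict]:
        """Actions that changed the network and may need to be undone."""
        return [entry for entry in self.audit_log if entry["decision"] == "applied"]


controller = Controller(Mode.SEMI_AUTONOMOUS, trusted_domains={"dc-fabric"})
in_domain = controller.handle("dc-fabric", "reroute", "link congestion")
out_of_domain = controller.handle("edge", "reroute", "link congestion")
```

Moving a domain into `trusted_domains` then becomes an explicit, reviewable step, which is one way to let confidence in the automation grow gradually.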

Challenges & Risks

- Garbage-in, garbage-out: Poor telemetry/data quality → poor AI decisions. Data normalisation remains a hurdle.

- Model scalability & complexity: Distributed fabrics generate huge volumes of telemetry; modelling and inference must scale.

- Trust / explainability: Business stakeholders must trust autonomous decisions. Without transparency, adoption stalls.

- Legacy systems & vendor silos: If parts of the network resist automation, the fabric is only as good as its weakest domain.

- Security & new attack surfaces: Autonomous behaviour can open new risks; the system itself must defend as well as operate.

- Standards & interoperability: Multi-vendor fabrics rely on open standards; fragmented stacks will hamper true autonomy.
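To make the data-normalisation hurdle concrete, the sketch below maps two made-up vendor record shapes onto one schema and rejects records that cannot be trusted. The field names `ifName`, `util_pct`, `port` and `utilisation` are invented for illustration and do not correspond to any specific vendor or standard data model.

```python
from typing import Optional


def normalise(record: dict) -> Optional[dict]:
    """Map a vendor-specific utilisation record onto a common schema,
    or return None if the record is incomplete or out of range."""
    name = record.get("ifName") or record.get("port")

    # One hypothetical vendor reports a percentage, the other a 0..1 fraction.
    raw, scale = record.get("util_pct"), 100.0
    if raw is None:
        raw, scale = record.get("utilisation"), 1.0

    if not name or isinstance(raw, bool) or not isinstance(raw, (int, float)):
        return None  # missing or non-numeric data is dropped, not guessed
    utilisation = raw / scale
    if not 0.0 <= utilisation <= 1.0:
        return None  # physically impossible value (also rejects NaN)
    return {"interface": name, "utilisation": float(utilisation)}


raw_records = [
    {"ifName": "eth1", "util_pct": 73.0},
    {"port": "Ethernet2", "utilisation": 0.41},
    {"port": "Ethernet3", "utilisation": 1.7},  # out of range
    {"ifName": "eth4"},                         # no measurement
]
clean = [r for r in map(normalise, raw_records) if r is not None]
```

Rejecting bad records at the edge of the pipeline keeps them from reaching the models that drive automated actions, at the cost of occasional gaps that the closed loop has to tolerate.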

🔗 Want to collaborate with us to build the future of AI-ready infrastructure?

Talk to an expert! Contact Accton today and let’s shape the next generation of sustainable data centers together.

