AI Infrastructure’s Open Future

AI Increasingly Used for Many Complex Applications

Artificial intelligence (AI) has developed rapidly in recent years as powerful computing resources have become more readily available, and AI systems are now applied to a growing number of commercial applications. In fact, you most likely use multiple forms of AI every day without even thinking about it.

Many AI systems today are based on artificial neural network technology, a type of AI that is loosely modeled on how the human brain works. Although constructed through software algorithms rather than physical neurons, all neural networks first have to learn a task before they can be applied to a problem. This “training” process requires large amounts of computing resources and typically demands dedicated hardware to run efficiently. Once a neural network model has been trained, it can evaluate new data and make decisions to solve a task, a process called “inference.” Inference also needs specialized hardware, but its demands are very different from those of training because a response to data is often required in real time. As AI systems have evolved, so has the hardware for faster and more efficient training and inference. This AI accelerator hardware has now become an essential part of the AI infrastructure that is used for an expanding range of complex applications.

AI Deep Neural Networks: Training and Inference

A neural network consists of layers of interconnected artificial neurons. Each neuron in the network responds to the strength, or weight, of the inputs it receives and then sends its own output to other neurons if the inputs are considered strong enough. A deep neural network has many interconnected layers of neurons. As data is fed into the network, a training algorithm adjusts the weights of the connections until a correct result is attained. Complex tasks, such as processing languages, can involve hundreds of neuron layers with billions of connection weights between neurons.
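The behavior of a single neuron and the weight adjustment described above can be illustrated with a minimal sketch. It assumes a sigmoid activation, a squared-error loss, and plain gradient descent on a toy logical-OR data set. None of these choices come from the text, and the names `neuron_output`, `train`, and `OR_SAMPLES` are hypothetical. Real deep networks stack many such neurons and use far larger data sets.

```python
import math

def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs passed through a sigmoid activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def train(samples, epochs=5000, learning_rate=0.5):
    """Adjust the weights by gradient descent on squared error (toy example)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            out = neuron_output(weights, bias, inputs)
            # Gradient of 0.5 * (out - target)^2 with respect to the weighted sum
            delta = (out - target) * out * (1.0 - out)
            weights = [w - learning_rate * delta * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias

# Toy "known data set" (an assumption for illustration): logical OR
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

if __name__ == "__main__":
    trained_weights, trained_bias = train(OR_SAMPLES)
    for sample_inputs, sample_target in OR_SAMPLES:
        result = neuron_output(trained_weights, trained_bias, sample_inputs)
        print(sample_inputs, sample_target, round(result, 3))
```

Each pass over the samples nudges the weights in the direction that reduces the error, which is the same idea, at a vastly smaller scale, as the training of a deep network.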

The AI training process feeds a large known data set into a deep neural network until a desired result is accomplished. Feeding thousands of known data samples into a neural network and processing them all takes a considerable amount of time and consumes vast amounts of resources. How much training is done to develop a particular neural network model depends on the required level of accuracy. However, working models are often continuously refined as new data is identified and added to the training.

AI inference is the process of deploying a trained neural network model to make decisions as new unknown data is fed to it. Rather than needing huge amounts of computing power, inference is constrained by other parameters, such as response time, throughput, and often size and power consumption. For this reason, trained neural network models are typically optimized through the pruning of unused neurons and the reduction of the numerical precision of neuron connection weights (for example, from 32-bit to 8-bit). Inference must meet its response-time requirements at an effective level of accuracy and be able to handle specific workloads. Optimized neural network models are often used in applications where a real-time response is required (typically less than 10 milliseconds), and inference is often deployed as multiple instances. That is, the inference needs to handle data from multiple input sources, which requires multiple hardware and software instances to process the workload.
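The two optimizations mentioned above can be sketched in a few lines. This is a minimal illustration only: it assumes symmetric linear quantization for the 32-bit to 8-bit step, and it uses simple magnitude pruning of weights as a stand-in for the pruning of unused neurons. The function names and the threshold are hypothetical, and production toolchains use more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of float weights to signed 8-bit integers.

    Returns the integer weights and the scale needed to recover approximate
    float values (float_value is roughly int_value * scale).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map 8-bit integer weights back to approximate float values."""
    return [q * scale for q in quantized]

def prune_small_weights(weights, threshold):
    """Magnitude pruning: zero out weights whose magnitude is below threshold."""
    return [0.0 if abs(w) < threshold else w for w in weights]

if __name__ == "__main__":
    example_weights = [0.82, -1.0, 0.003, 0.25, -0.41]
    pruned = prune_small_weights(example_weights, threshold=0.01)
    int8_weights, scale = quantize_int8(pruned)
    print(int8_weights, scale)
    print(dequantize(int8_weights, scale))
```

Storing each weight in 8 bits instead of 32 cuts the memory needed for the weights to a quarter, at the cost of a rounding error of at most half the scale per weight in this scheme.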

Hardware Accelerators Boost AI Training and Inference

The training of artificial neural networks often takes place within data centers, where large amounts of computing resources are available. However, most computing hardware in data centers is designed for general-purpose applications and is therefore inefficient for AI training. To increase performance, efficiency, and scalability, AI training needs dedicated hardware that “accelerates” the process. The adoption of accelerator hardware for AI training is now considered essential, and this has driven a number of technology initiatives that are in a state of constant change and development.

The key element in AI training accelerators is how data is processed. General-purpose CPUs are designed to perform complex operations on serial data, whereas AI training is better served by processors, such as GPUs, that perform simple operations on parallel data. That is, a high-end CPU may have 48 processor cores, each working through its instructions one at a time, whereas a GPU may have as many as 40,000 processor cores that apply the same instruction to many data elements simultaneously. For this reason, GPUs have been widely used as AI accelerator hardware because they are built on a parallel processing architecture. To achieve even better training performance, custom-designed AI chips that are based on parallel computing architectures, but that also include high-bandwidth memory and other features, are increasingly being deployed. Dedicated AI training hardware can be many times faster and hundreds of times more energy-efficient than general-purpose CPU computing.

AI inference also needs accelerator hardware, but the requirements are quite different from those of AI training. Although inference for some applications is best implemented in a data center, there is often a need for inference to be performed at the network edge, which is close to the source of the data. For example, an inference accelerator would be needed in a self-driving vehicle for a real-time response, or could be embedded in IoT devices or other hand-held devices. This means that in some cases inference accelerator hardware must be small in size, with low power consumption, and often at the lowest possible cost. The hardware typically requires high throughput and enough memory to hold the trained neural network model, with the data processing performed by standard CPUs or GPUs. However, for inference hardware at the network edge, the need for low power consumption and high efficiency has led to the development of special-function ASICs that accommodate processing, memory, networking, and additional features in one chip.

Another requirement for AI accelerator hardware in a data center, whether for training or inference, is the ability to scale up and interconnect. Training accelerators must be able to scale up to process huge amounts of data, and inference accelerators must be able to scale to handle a large number of instances.

OCP’s Open Accelerator Infrastructure

To support the development of AI accelerator hardware, the Open Compute Project (OCP) formed the Open Accelerator Infrastructure (OAI) initiative to define specifications for modular, interoperable hardware that reduces the time and effort required for AI solution design. The OCP-OAI specification includes a rack-mountable chassis, a removable tray, and up to eight mezzanine accelerator modules that sit on an interconnect baseboard. The specifications allow for maximum flexibility in design by providing a wide range of options for interconnect standards, protocols, and topologies. This approach to the OAI system embraces not only current solutions, but also accommodates future developments as they rapidly emerge.

The OCP Accelerator Module (OAM) could be considered an upgrade from using PCIe cards as a standard form factor for AI hardware. The OAM mezzanine module (OCP Accelerator Module Design Specification v1.1) can include one or more ASICs or GPUs, although typical designs implement a single ASIC/GPU. The OAI Universal Baseboard (OCP Universal Baseboard Design Specification v1.0) can support up to eight OAMs and provides high-speed interconnection between OAMs in a number of different topologies. In addition, the OAMs include a high-speed connection to a host server through the OAI system’s Host Interface Board.

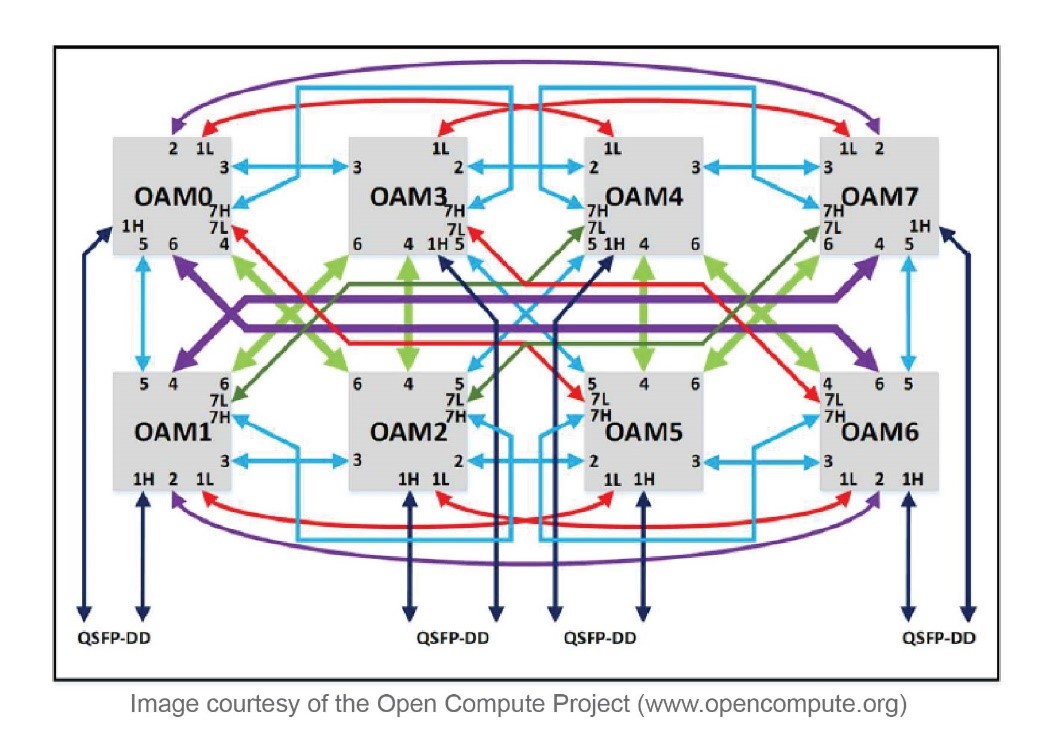

Each OAM supports seven 16-lane (x16) serializer/deserializer (SerDes) links that are designed for data rates up to 28 Gbps NRZ or 56 Gbps PAM4 per lane. The x16 SerDes links can be split into sub-links, such as 2 x8 or 4 x4, which allows for different topology interconnections and scale-out connections. For example, consider the following topology from the OCP Accelerator Module Design Specification, which uses split x8 links to fully connect the OAMs and provide eight scale-out links through the QSFP-DD connectors. The lanes of each split x8 port are indicated as either the lower half (L) or the higher half (H). In particular, the higher lanes of port 1 (1H) are used for the scale-out expansion from each OAM.

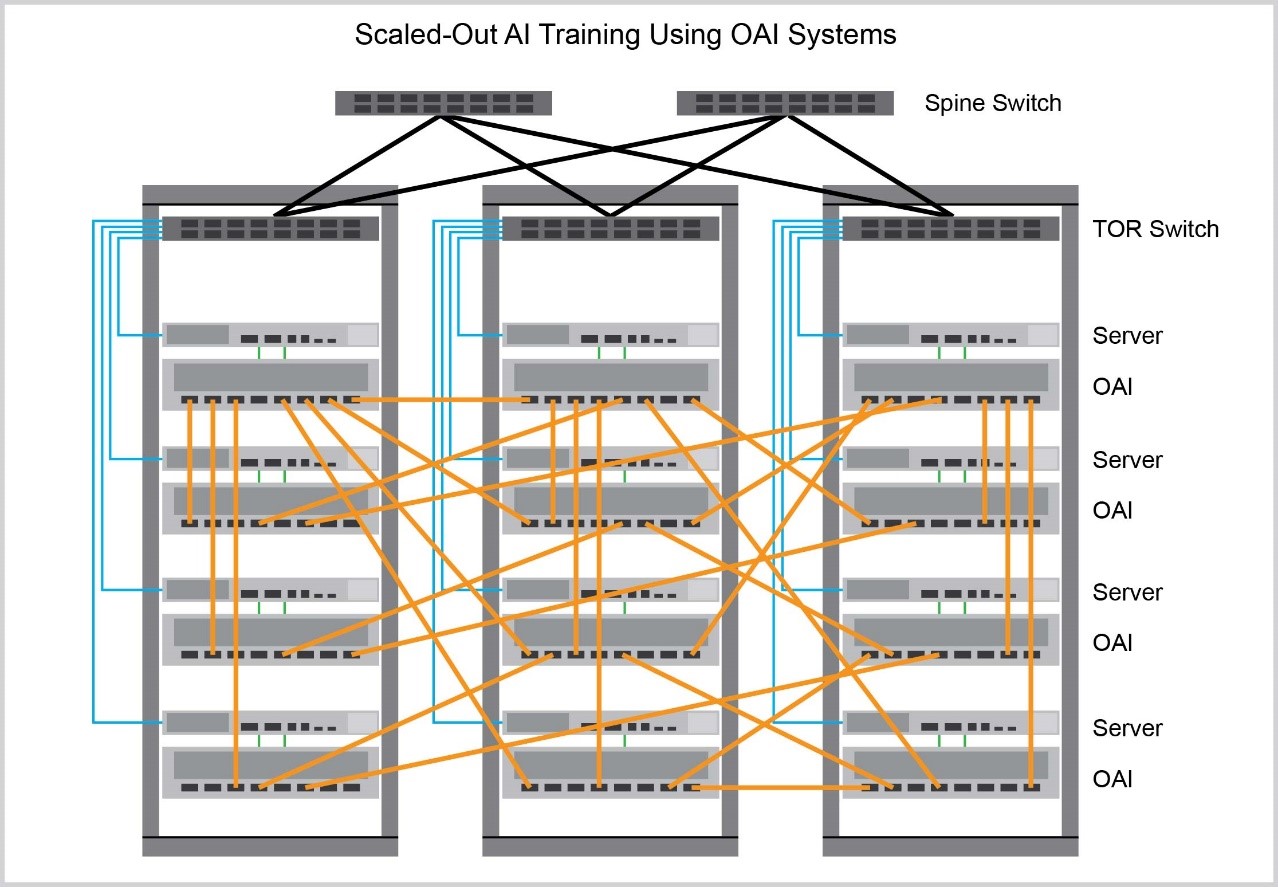
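The link figures quoted above lend themselves to some quick arithmetic. The sketch below is illustrative only: it computes raw one-direction signaling rates and ignores encoding and protocol overhead, and it assumes that the fully connected topology uses exactly one x8 sub-link per peer OAM plus one x8 sub-link (1H) for scale-out. The function and constant names are hypothetical and do not come from the specification.

```python
LANES_PER_LINK = 16        # x16 SerDes link
LINKS_PER_OAM = 7          # seven x16 links per OAM
OAMS_PER_BASEBOARD = 8     # up to eight OAMs per baseboard
NRZ_GBPS_PER_LANE = 28
PAM4_GBPS_PER_LANE = 56

def raw_link_gbps(lanes, lane_rate_gbps):
    """Raw one-direction signaling rate of a link, ignoring all overhead."""
    return lanes * lane_rate_gbps

def fully_connected_links(nodes):
    """Point-to-point links needed so every node connects to every other."""
    return nodes * (nodes - 1) // 2

def x8_sublinks_used_per_oam(nodes, scale_out_sublinks=1):
    """x8 sub-links one OAM uses: one per peer plus its scale-out sub-links."""
    return (nodes - 1) + scale_out_sublinks

if __name__ == "__main__":
    print("x16 link, PAM4:", raw_link_gbps(LANES_PER_LINK, PAM4_GBPS_PER_LANE), "Gbps")
    print("x8 sub-link, PAM4:", raw_link_gbps(8, PAM4_GBPS_PER_LANE), "Gbps")
    print("Peer links in a full mesh:", fully_connected_links(OAMS_PER_BASEBOARD))
    print("x8 sub-links used per OAM:", x8_sublinks_used_per_oam(OAMS_PER_BASEBOARD),
          "of", LINKS_PER_OAM * 2, "available")
```

Under these assumptions, a full mesh of eight OAMs needs 28 peer links, and each OAM uses 8 of its 14 possible x8 sub-links, which leaves headroom for other topologies or wider links between selected modules.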

The OCP-OAI specification for the open accelerator system can be configured for AI training or inference and enables various scale-out capabilities. Although each OAI system contains up to eight interconnected accelerator modules, large-scale distributed training or multiple-instance inference needs connections across multiple systems. This is achieved through the high-bandwidth QSFP-DD connectors that can provide passive or active copper-cable connections to other OAI systems in the same rack or other racks. This scalability enables the construction of AI systems that are capable of processing hundreds of thousands of images per second, or handling tens of thousands of natural language sentences per second.

AI Hardware to Continue its Growth and Importance

As AI gains importance and is applied to more applications, the demand for AI accelerator hardware will continue to grow. Whether it is AI training in a data center or AI inference at the network edge, AI hardware will keep evolving, increasing in both processing power and efficiency. The OCP initiative to specify an open modular AI infrastructure that supports interoperability and flexibility has undoubtedly helped AI solution providers in their development efforts. As new AI accelerator hardware emerges, it can now be quickly deployed to power through the data of many new complex AI applications.